
Quite a bold statement! Claiming I can guarantee you’ll land a job, that is.

OK, the truth is, nothing in life is guaranteed, especially finding a job. Not even in data science. But what will get you veeeery, very close to the guarantee is having data projects in your portfolio.

Why do I think projects are so decisive? Because, if chosen wisely, they most effectively showcase the range and depth of your technical data science skills. The quality of projects counts, not their number. They should cover as many data science skills as possible.

So, how do you cover those skills with the fewest projects? If I were limited to doing only three, I would select these.

But don’t take it too literally. The message here is not that you should stick strictly to those three. I selected them because they cover most of the technical skills required in data science. If you want to do some other data science projects, feel free to do so. But if you’re limited in time or in the number of projects you can do, choose wisely and select those that will test the widest array of data science skills.

Speaking of which, let’s make clear what those skills are.

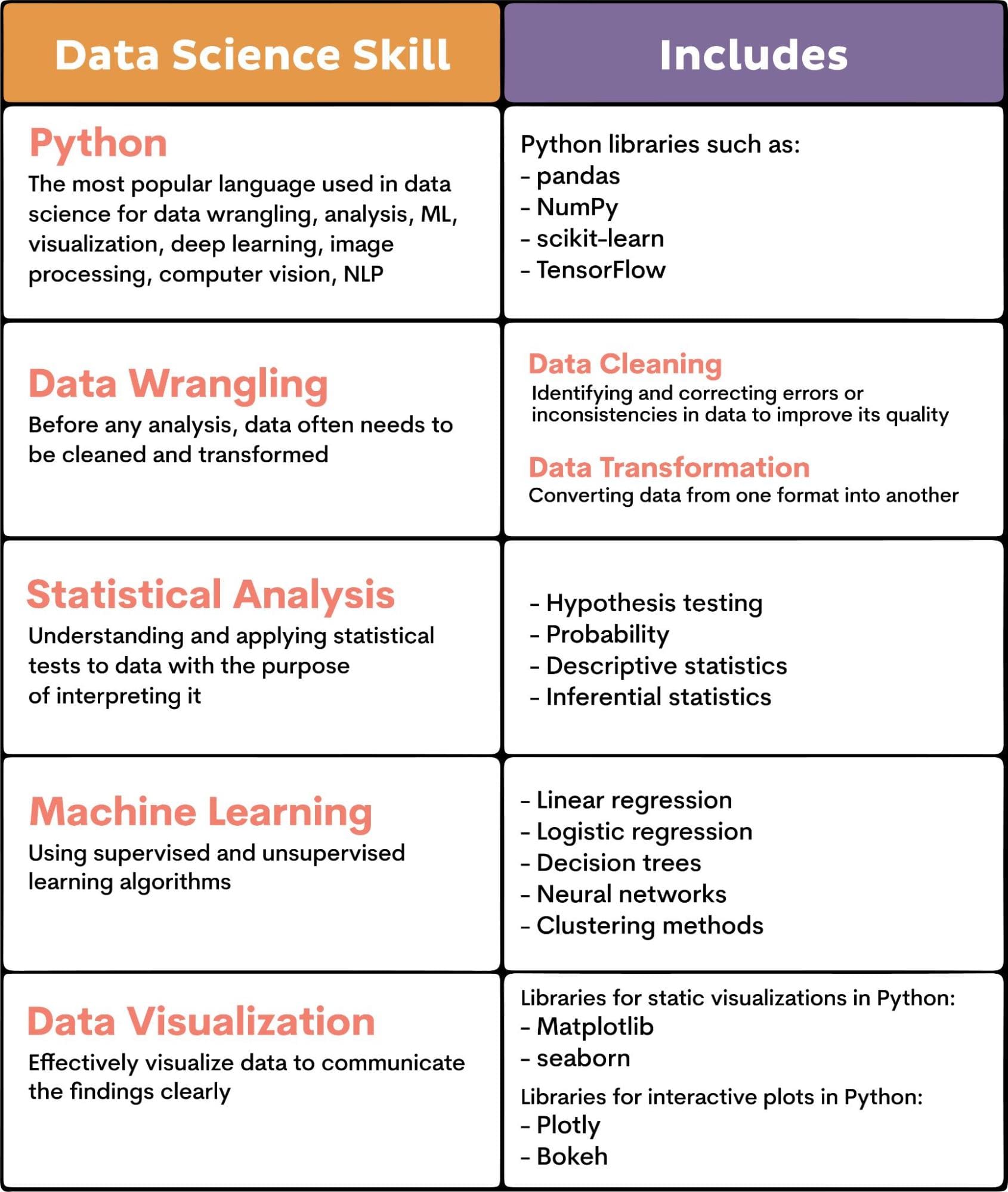

There are five fundamental skills in data science.

- Python

- Data Wrangling

- Statistical Analysis

- Machine Learning

- Data Visualization

This is a checklist you should consider when trying to get the maximum from the data science projects you choose.

Here’s an overview of what these skills encompass.

Of course, there’s much more to data science skills. They also include knowing SQL and R, big data technologies, deep learning, natural language processing, and cloud computing.

However, the need for them heavily depends on the job description. But the fundamental five skills I mentioned, you can’t do without.

Let’s now take a look at how the three data science projects I chose challenge these skills.

Some of these projects might be a little too advanced for some. In that case, give these 19 data science projects for beginners a try.

1. Understanding City Supply and Demand: Business Analysis

Source: Insights from City Supply and Demand Data

Topic: Business Analysis

Brief Overview: Cities are hubs of demand and supply interactions for Uber. Analyzing these can offer insights into the company’s business and planning. Uber gives you a dataset with details about trips. You need to answer eleven questions to provide business insights into trips, their timing, the demand for drivers, etc.

Project Execution: You’re given eleven questions which have to be answered in the displayed order. Answering them will involve tasks such as

- Filling in the missing values,

- Aggregating data,

- Finding the largest values,

- Parsing time intervals,

- Calculating percentages,

- Calculating weighted averages,

- Finding differences,

- Visualizing data, and so on.

Skills Showcased: Exploratory data analysis (EDA) for selecting needed columns and filling in the missing values, deriving actionable insights about completed trips (different periods, weighted average ratio of trips per driver, finding the busiest hours to help draft a driver schedule, the relationship between supply and demand, etc.), visualizing the relationship between supply and demand.
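To make a few of these tasks concrete, here is a minimal pandas sketch. The tiny table and its column names (`date`, `hour`, `requests`, `completed_trips`, `unique_drivers`) are assumptions made up for illustration, not the project's actual dataset, and the assumption that the date appears only on the first row of each day is also mine.

```python
import numpy as np
import pandas as pd

# Hypothetical hourly trip data; the values and column names are assumptions.
trips = pd.DataFrame({
    "date": ["10-Sep-12", None, None, "11-Sep-12", None, None],
    "hour": [7, 8, 9, 7, 8, 9],
    "requests": [10, 20, 30, 12, 24, 6],
    "completed_trips": [8, 15, 27, 9, 20, 6],
    "unique_drivers": [4, 5, 9, 3, 10, 2],
})

# Fill in the missing values: carry each day's date down to the following rows.
trips["date"] = trips["date"].ffill()

# Aggregate per day and find the day with the most completed trips.
per_day = trips.groupby("date")["completed_trips"].sum()
busiest_day = per_day.idxmax()

# Percentage of requests that ended as completed trips, per hour of day.
per_hour = trips.groupby("hour")[["requests", "completed_trips"]].sum()
per_hour["completion_pct"] = 100 * per_hour["completed_trips"] / per_hour["requests"]

# Weighted average of trips per driver, weighted by the completed trips in each row.
trips_per_driver = trips["completed_trips"] / trips["unique_drivers"]
weighted_avg = np.average(trips_per_driver, weights=trips["completed_trips"])
```

The same pattern (fill, group, aggregate, derive a ratio) covers most of the eleven questions. Only the grouping key and the metric change.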

2. Customer Churn Prediction: A Classification Task

Source: Customer Churn Prediction

Topic: Supervised learning (classification)

Brief Overview: In this data science project, Sony Research gives you a dataset of a telecom company’s customers. They expect you to perform exploratory analysis and extract insights. Then you’ll have to build a churn prediction model, evaluate it and discuss the issues when deploying the model into production.

Project Execution: The project should be approached in these major phases.

- Exploratory Analysis and Extracting Insights

  - Check data fundamentals (nulls, uniqueness)

  - Choose the data you need and form your dataset

  - Visualize data to check the distribution of the values

  - Form a correlation matrix

  - Check the feature importances

- Use sklearn to split the dataset into training and testing sets using the 80%-20% ratio

- Apply classifiers and pick one to use in production based on the performance

- Use accuracy and F1 score while comparing the performance of different algorithms

- Use classical ML models

- Visualize the Decision Tree and see how tree-based algorithms perform

- Try an Artificial Neural Network (ANN) on this problem

- Monitor the model performance to catch data drift and concept drift
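The split, model comparison, and metric steps above can be sketched with scikit-learn as follows. This is only an illustration: the data is synthetic (generated with `make_classification`), and the three classifiers and their settings are my own picks, not necessarily the ones the project expects.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score, f1_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the telecom data (assumption): roughly 20% churners.
X, y = make_classification(
    n_samples=1000, n_features=10, n_informative=5,
    weights=[0.8, 0.2], random_state=42,
)

# 80%-20% split; stratify keeps the churn rate nearly equal in both parts.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)

# Candidate classifiers (illustrative choices and hyperparameters).
models = {
    "logistic_regression": LogisticRegression(max_iter=1000),
    "decision_tree": DecisionTreeClassifier(max_depth=5, random_state=42),
    "random_forest": RandomForestClassifier(n_estimators=100, random_state=42),
}

# Fit each model and record accuracy and F1 on the held-out 20%.
scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    pred = model.predict(X_test)
    scores[name] = {
        "accuracy": accuracy_score(y_test, pred),
        "f1": f1_score(y_test, pred),
    }

# Pick the candidate with the best F1 on the churn class.
best_model = max(scores, key=lambda name: scores[name]["f1"])
```

Looking at F1 next to accuracy matters here because churners are usually the minority class: a model that predicts "no churn" for everyone can still score high accuracy.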

Skills Showcased: Exploratory data analysis (EDA) and data wrangling to check for nulls and data uniqueness, and to derive insights about the distribution of data and positive and negative correlations; data visualization in histograms and a correlation matrix; applying ML classifiers using the sklearn library, measuring the algorithms’ accuracy and F1 score, comparing the algorithms, and visualizing a decision tree; using an Artificial Neural Network to see how deep learning performs; model deployment, where you need to be aware of data drift and concept drift problems in the MLOps cycle.
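One simple way to watch for data drift after deployment is to compare a feature's distribution in production against its distribution at training time. The sketch below uses the population stability index (PSI), which is my choice of technique and not something the project prescribes. The function name, the bin count, and the simulated feature are all assumptions.

```python
import numpy as np

def population_stability_index(expected, actual, bins=10):
    """PSI between a training-time sample and a production sample of one feature."""
    # Bin edges come from the quantiles of the training-time sample.
    edges = np.quantile(expected, np.linspace(0, 1, bins + 1))
    # Use interior edges only, so out-of-range values fall into the end bins.
    inner = edges[1:-1]
    exp_counts = np.bincount(np.searchsorted(inner, expected), minlength=bins)
    act_counts = np.bincount(np.searchsorted(inner, actual), minlength=bins)
    # A small floor avoids log(0) when a bin is empty.
    exp_pct = np.clip(exp_counts / len(expected), 1e-6, None)
    act_pct = np.clip(act_counts / len(actual), 1e-6, None)
    return float(np.sum((act_pct - exp_pct) * np.log(act_pct / exp_pct)))

rng = np.random.default_rng(0)
train_feature = rng.normal(50, 10, 5000)    # simulated feature at training time
same_feature = rng.normal(50, 10, 5000)     # production sample, no drift
shifted_feature = rng.normal(60, 10, 5000)  # production sample after a shift

psi_stable = population_stability_index(train_feature, same_feature)
psi_drifted = population_stability_index(train_feature, shifted_feature)
```

A larger PSI suggests the feature has moved away from what the model saw during training. Note that this only addresses data drift. Concept drift, where the relationship between features and churn changes, needs fresh labels and a re-check of metrics such as F1 on recent data.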

3. Predictive Policing: Examining the Implications

Source: The Perils of Predictive Policing

Topic: Supervised learning (regression)

Brief Overview: Predictive policing uses algorithms and data analytics to predict where crimes are likely to happen. The approach you choose can have profound ethical and societal implications. The project uses the 2016 City of San Francisco crime data from the city’s open data initiative. It attempts to predict the number of crime incidents in a given zip code on a certain day of the week and time of day.

Project Execution: Here are the main steps the project author has undertaken.

- Selecting the variables and calculating the total number of crimes per year per zip code per hour

- Splitting the data into training and test sets chronologically

- Trying five regression algorithms:

  - Linear regression

  - Random Forest

  - K-Nearest Neighbors

  - XGBoost

  - Multilayer Perceptron

Skills Showcased: Exploratory data analysis (EDA) and data wrangling, where you end up with data about crimes, hour, day of the week, and zip code; ML (supervised learning/regression), where you compare how linear regression, random forest regressor, K-nearest neighbors, and XGBoost perform; deep learning, where you use a multilayer perceptron to try to explain the results you get; deriving insights on crime prediction and its potential to be misused; deploying the model as an interactive map.
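The core of this workflow (counting incidents, splitting chronologically, comparing regressors) can be sketched as below. Everything here is illustrative: the incident log is randomly generated rather than the real San Francisco data, the three zip codes, the cutoff date, and the mean absolute error metric are my assumptions, and XGBoost and the multilayer perceptron are left out to keep the dependencies to pandas and scikit-learn.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_absolute_error
from sklearn.neighbors import KNeighborsRegressor

# Synthetic incident log (assumption): one row per incident during 2016.
rng = np.random.default_rng(0)
n = 20000
incidents = pd.DataFrame({
    "timestamp": pd.Timestamp("2016-01-01")
    + pd.to_timedelta(rng.integers(0, 366 * 24, n), unit="h"),
    "zip_code": rng.choice([94102, 94103, 94110], size=n, p=[0.5, 0.3, 0.2]),
})
incidents["date"] = incidents["timestamp"].dt.normalize()
incidents["hour"] = incidents["timestamp"].dt.hour
incidents["day_of_week"] = incidents["timestamp"].dt.dayofweek

# Target: number of incidents per date, zip code, and hour.
# Simplification: slots with zero incidents do not appear as rows.
counts = (
    incidents.groupby(["date", "zip_code", "day_of_week", "hour"])
    .size()
    .reset_index(name="n_incidents")
)

# One-hot encode the zip code so it is not treated as a numeric quantity.
X = pd.get_dummies(
    counts[["zip_code", "day_of_week", "hour"]], columns=["zip_code"], dtype=int
)
y = counts["n_incidents"]

# Chronological split: train on earlier dates, test on later ones, no shuffling.
cutoff = pd.Timestamp("2016-10-01")
is_train = counts["date"] < cutoff
X_train, X_test = X[is_train], X[~is_train]
y_train, y_test = y[is_train], y[~is_train]

models = {
    "linear_regression": LinearRegression(),
    "random_forest": RandomForestRegressor(n_estimators=50, random_state=0),
    "knn": KNeighborsRegressor(n_neighbors=5),
}

# Fit each regressor on the past and score it on the later dates.
mae = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    mae[name] = mean_absolute_error(y_test, model.predict(X_test))
```

Splitting by date rather than at random mimics how the model would actually be used: trained on the past and asked about the future.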

If you want to do more projects using similar skills, here are 30+ ML project ideas.

By completing these data science projects, you will test and acquire essential data science skills, such as data wrangling, data visualization, statistical analysis, and building and deploying ML models.

Speaking of ML, I focused here on supervised learning as this is more commonly used in data science. I can almost guarantee you that these data science projects will be enough to land you the job you want.

But you should read the job description carefully. If you see that it requires unsupervised learning, NLP, or something else I didn’t cover here, include such a project or two in your portfolio.

No matter what, you’re still not stuck with only three projects. They are here to guide you on how to choose projects that will guarantee you land a job. Be mindful of the projects’ complexity, as they should cover fundamental data science skills extensively.

Now, off you go and land that job!

Nate Rosidi is a data scientist and works in product strategy. He’s also an adjunct professor teaching analytics, and is the founder of StrataScratch, a platform helping data scientists prepare for their interviews with real interview questions from top companies. Connect with him on Twitter: StrataScratch or LinkedIn.