LLM hallucination detection challenges and a possible solution presented in a prominent research paper

Recently, large language models (LLMs) have shown impressive and increasing capabilities, including generating highly fluent and convincing responses to user prompts. However, LLMs are also known for their tendency to generate non-factual or nonsensical statements, more commonly known as “hallucinations.” This characteristic can undermine trust in many scenarios where factuality is required, such as summarization, generative question answering, and dialogue generation.

Detecting hallucinations has always been challenging among humans, and it remains so in the context of LLMs. It is especially challenging because we usually don’t have access to ground-truth context for consistency checks. Additional information on the LLM’s generations, like the output probability distributions, can help with this task. Still, this type of information is often unavailable, making the task even more difficult.

Hallucination detection has yet to be solved and is an active area of research. In this blog post, we’ll present the task and its challenges, along with one possible approach published in the research paper SELFCHECKGPT: Zero-Resource Black-Box Hallucination Detection for Generative Large Language Models[1]. We will illustrate some of the approaches presented in the paper with real examples, pointing out some pros and cons of each method. You can review the examples yourself by going to this Google Colab Notebook.

This blog will cover:

- What Is LLM Hallucination

- The Approach: SelfCheckGPT

- Consistency Check

1. BERTScore

2. Natural Language Inference

3. LLM Prompt

- Experiments

- Conclusion

- References

What Is LLM Hallucination

In natural language generation, hallucination can be defined as “generated content that is nonsensical or unfaithful to the provided source content”[2]. The source content can represent different functions for different tasks. For example, for summarization tasks, the source is the input text being summarized, while for generative question-answering tasks, the source is considered the world knowledge[2]. Let’s focus on the question-answering task and take a look at one example of a user question and the answer given by OpenAI’s GPT model (text-davinci-003):

User Prompt: Who was Philip Hayworth?

LLM Response: Philip Hayworth was an English barrister and politician who served as Member of Parliament for Thetford from 1859 to 1868.

At first sight, that looks like a coherent response, but after a while, we might ask ourselves a very reasonable question: is it true?

We don’t have much information to answer our new question, though. We don’t have a context with proven facts we can use to verify the statement. LLMs are typically trained on massive corpora of text, and it can be hard to verify whether a statement is grounded in a particular source of information. A quick Google search does not show any hits for a person matching the response. What exactly constitutes a hallucination is still not well established, but this example can reasonably be considered a hallucination because a person with this name, location, and occupation may not have existed at all.

Let’s try this again with another question:

User Prompt: Who is Bill Gates?

LLM Response: Bill Gates is a technology entrepreneur, investor, and philanthropist. He is the co-founder of Microsoft Corporation and is one of the wealthiest people in the world.

Ok, these statements are much easier to verify and are supported by a quick check on Wikipedia, so this is unlikely to be a hallucination. During training, it is very likely that the LLM has seen a lot of statements about Bill Gates, so the following tokens after “Bill Gates is _” will likely be generated with high confidence. On the other hand, the LLM might not be sure about which words to use after “Philip Hayworth is _”. This insight allows us to link uncertainty with factuality, as factual sentences will likely contain tokens predicted with a higher probability when compared to hallucinated sentences. However, we might not have the output probability distribution at hand for a good number of cases.
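To make the link between uncertainty and factuality concrete, here is a minimal sketch of one way to turn token probabilities into a sentence-level uncertainty value: the average negative log-probability of the tokens. The function name and the probabilities below are made up for illustration; they are not outputs of any real model.

```python
import math

def avg_neg_log_prob(token_probs):
    """Average negative log-probability of the tokens in one sentence.

    Higher values mean the model was less confident on average.
    """
    return -sum(math.log(p) for p in token_probs) / len(token_probs)

# Hypothetical token probabilities, for illustration only.
confident_sentence = [0.90, 0.80, 0.95, 0.85]   # e.g. a well-known fact
uncertain_sentence = [0.30, 0.20, 0.40, 0.10]   # e.g. a possible hallucination

print(avg_neg_log_prob(confident_sentence))
print(avg_neg_log_prob(uncertain_sentence))
```

Under this sketch, the sentence generated with lower token probabilities gets the higher uncertainty value. It only works when the token probabilities are available, which is exactly the limitation mentioned above.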

The examples and content of this section are based on the original paper[1], and we will continue to explore the paper’s approach in the following sections.

The Approach: SelfCheckGPT

In the last section, we identified two important considerations for our approach: access to an external context and access to the LLM’s output probability distribution. When a method does not require an external context or database to perform the consistency check, we can call it a zero-resource method. Similarly, when a method requires only the LLM’s generated text, it can be called a black-box method.

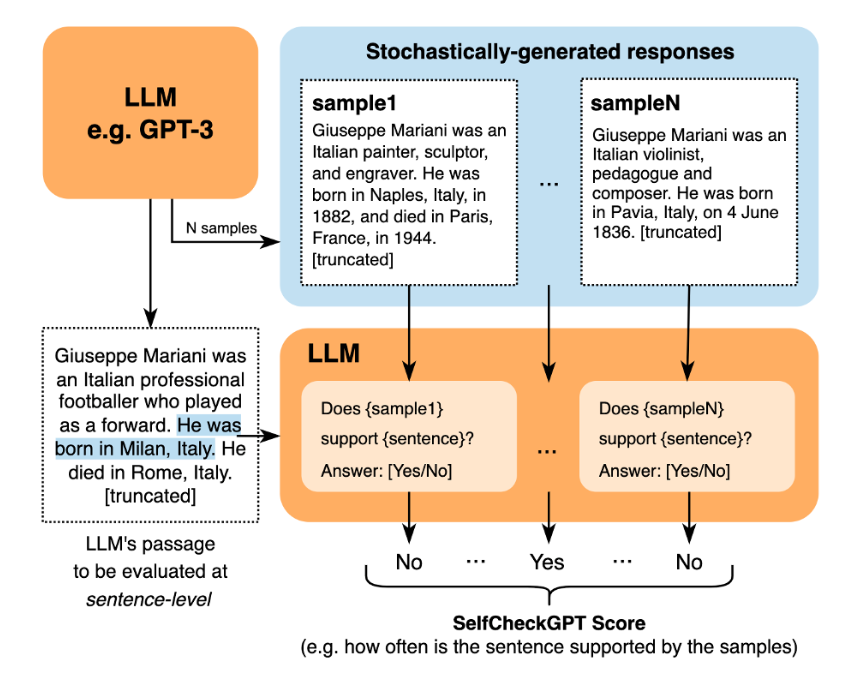

The approach we want to talk about in this blog post is a zero-resource black-box hallucination detection method and is based on the premise that sampled responses to the same prompt will likely diverge and contradict each other for hallucinated facts, and will likely be similar and consistent with each other for factual statements.
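The premise can be sketched as a small pipeline: draw several stochastic responses to the same prompt, then score each sentence of the original answer against every sample and average the result. The names `draw_samples`, `selfcheck`, `generate`, and `score_fn` are hypothetical placeholders, not part of the paper's code; `generate` stands for whatever LLM call you use, and `score_fn` for any inconsistency measure returning a value between 0 (consistent) and 1 (inconsistent).

```python
from typing import Callable, List

def draw_samples(generate: Callable[[str], str], prompt: str, n: int = 3) -> List[str]:
    """Ask the same prompt n times and collect the (stochastic) responses."""
    return [generate(prompt) for _ in range(n)]

def selfcheck(
    sentences: List[str],
    samples: List[str],
    score_fn: Callable[[str, str], float],
) -> List[float]:
    """Average a per-(sentence, sample) inconsistency score over all samples.

    Returns one score per sentence of the original answer.
    """
    return [
        sum(score_fn(sentence, sample) for sample in samples) / len(samples)
        for sentence in sentences
    ]
```

Only generated text goes in and out of these functions, which is what makes the approach black-box and zero-resource.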

Let’s revisit the previous examples. To apply the detection method, we need more samples, so let’s ask the LLM the same question three more times:

Indeed, the answers contradict each other — at times, Philip Hayworth is a British politician, and in other samples, he is an Australian engineer or an American lawyer, who all lived and acted in different periods.

Let’s compare with the Bill Gates example:

We can observe that the occupations, organizations, and traits assigned to Bill Gates are consistent across samples, with equal or semantically similar terms being used.

Consistency Check

Now that we have multiple samples, the final step is to perform a consistency check — a way to determine whether the answers agree with each other. This can be done in a number of ways, so let’s explore some approaches presented in the paper. Feel free to execute the code yourself by checking this Google Colab Notebook.

BERTScore

An intuitive way to perform this check is to measure the semantic similarity between the samples, and BERTScore[3] is one way to do that. BERTScore computes the similarity between each token in the candidate sentence and each token in the reference sentence, using contextual embeddings, and aggregates these token-level similarities into a single similarity score between the two sentences.
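The token-matching idea can be illustrated with a toy sketch. It assumes each token is already represented by an embedding vector and uses greedy matching on cosine similarity; the real BERTScore uses contextual BERT embeddings and has optional extras (such as importance weighting) that are left out here. The function names and the two-dimensional vectors are made up for illustration.

```python
import math

def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def greedy_match_f1(candidate, reference):
    """F1 of greedy token matching between two lists of token embeddings."""
    sims = [[cosine(c, r) for r in reference] for c in candidate]
    # Precision: each candidate token is matched to its most similar reference token.
    precision = sum(max(row) for row in sims) / len(candidate)
    # Recall: each reference token is matched to its most similar candidate token.
    recall = sum(
        max(sims[i][j] for i in range(len(candidate)))
        for j in range(len(reference))
    ) / len(reference)
    if precision + recall <= 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Toy 2-D "embeddings", for illustration only.
candidate = [[1.0, 0.0]]
reference = [[1.0, 0.0], [0.0, 1.0]]
print(greedy_match_f1(candidate, reference))
```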

In the context of SelfCheckGPT, the score is calculated per sentence. Each sentence of the original answer will be scored against each sentence of a given sample to find the most similar sentence. These maximum similarity scores will be averaged across all samples, resulting in a final hallucination score for each sentence in the original answer. The final score needs to tend towards 1 for dissimilar sentences and 0 for similar sentences, so we need to subtract the similarity score from 1.

Let’s show how this works with the first sentence of our original answer being checked against the first sample:

The maximum score for the first sample is 0.69. Repeating the process for the two remaining samples and assuming the other maximum scores were 0.72 and 0.72, our final score for this sentence would be 1 - (0.69 + 0.72 + 0.72)/3 = 0.29.
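The aggregation described above can be written in a few lines. This is a minimal sketch that assumes the sentence-to-sentence similarities have already been computed; the function name is hypothetical, the three maxima (0.69, 0.72, 0.72) come from the example, and the remaining values are made up to fill out the table.

```python
def selfcheck_bertscore(similarities):
    """Hallucination score for one sentence of the original answer.

    similarities[n][k] is the similarity between that sentence and
    sentence k of sample n. The score is 1 minus the average, over
    samples, of the best-matching sentence's similarity.
    """
    max_per_sample = [max(sample_sims) for sample_sims in similarities]
    return 1.0 - sum(max_per_sample) / len(max_per_sample)

sims = [
    [0.69, 0.41],  # sample 1: maximum is 0.69 (0.41 is made up)
    [0.72, 0.35],  # sample 2: maximum is 0.72 (0.35 is made up)
    [0.50, 0.72],  # sample 3: maximum is 0.72 (0.50 is made up)
]
score = selfcheck_bertscore(sims)
print(round(score, 2))  # 0.29
```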

Using semantic similarity to verify consistency is an intuitive approach. Other encoders can be used for embedding representations, so it’s also an approach that can be further explored.

Natural Language Inference

Natural language inference is the task of determining entailment, that is, whether a hypothesis is true, false, or undetermined based on a premise[4]. In our case, each sample is used as the premise and each sentence of the original answer is used as our hypothesis. The scores across samples are averaged for each sentence to obtain the final score. The entailment is performed with a DeBERTa model fine-tuned on the Multi-NLI dataset[5]. We’ll use the normalized prediction probability instead of the actual classes, such as “entailment” or “contradiction,” to compute the scores[6].
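As a rough sketch of the scoring step, the snippet below takes one plausible reading of “normalized prediction probability”: a softmax restricted to the entailment and contradiction logits, ignoring the neutral class. That reading is an assumption, as are the function names; the logits would come from the NLI model, which is not called here.

```python
import math

def contradiction_probability(entail_logit, contra_logit):
    """Probability of contradiction, normalized over entailment and
    contradiction only (assumption: the neutral class is ignored)."""
    m = max(entail_logit, contra_logit)  # subtract the max for numerical stability
    e = math.exp(entail_logit - m)
    c = math.exp(contra_logit - m)
    return c / (e + c)

def selfcheck_nli(logit_pairs):
    """Average contradiction probability for one sentence.

    logit_pairs holds one (entailment_logit, contradiction_logit) pair
    per sample, with the sample as premise and the sentence as hypothesis.
    """
    probs = [contradiction_probability(e, c) for e, c in logit_pairs]
    return sum(probs) / len(probs)
```

A score close to 1 means the samples tend to contradict the sentence, and a score close to 0 means they tend to entail it.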

The entailment task is closer to our goal of consistency checking, so we can expect that a model fine-tuned for that purpose will perform well. The authors also shared the model publicly on Hugging Face, and other NLI models are publicly available, making this approach very accessible.

LLM Prompt

Considering we already use LLMs to generate the answers and samples, we might as well use an LLM to perform the consistency check. We can query the LLM for a consistency check for each original sentence and each sample as our context. The image below, taken from the original paper’s repository, illustrates how this is done:

The final score can be computed by assigning 1 to “No”, 0 to “Yes”, and 0.5 to “N/A”, and then averaging the values across samples.
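This mapping is simple enough to sketch directly. The function name is hypothetical, and treating any answer other than “Yes” or “No” as N/A is an assumption made here to keep the example short.

```python
ANSWER_SCORES = {"yes": 0.0, "no": 1.0}

def selfcheck_prompt(answers):
    """Average the mapped answers for one sentence, one answer per sample.

    "Yes" -> 0, "No" -> 1, anything else is treated as N/A -> 0.5
    (the catch-all is an assumption of this sketch).
    """
    values = [
        ANSWER_SCORES.get(answer.strip().strip(".").lower(), 0.5)
        for answer in answers
    ]
    return sum(values) / len(values)

print(selfcheck_prompt(["Yes", "No", "N/A"]))  # 0.5
```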

Unlike the other two approaches, this one incurs extra calls to the LLM of your choice, meaning additional latency and, possibly, additional costs. On the other hand, we can leverage the LLM’s capabilities to help us perform this check.

Experiments

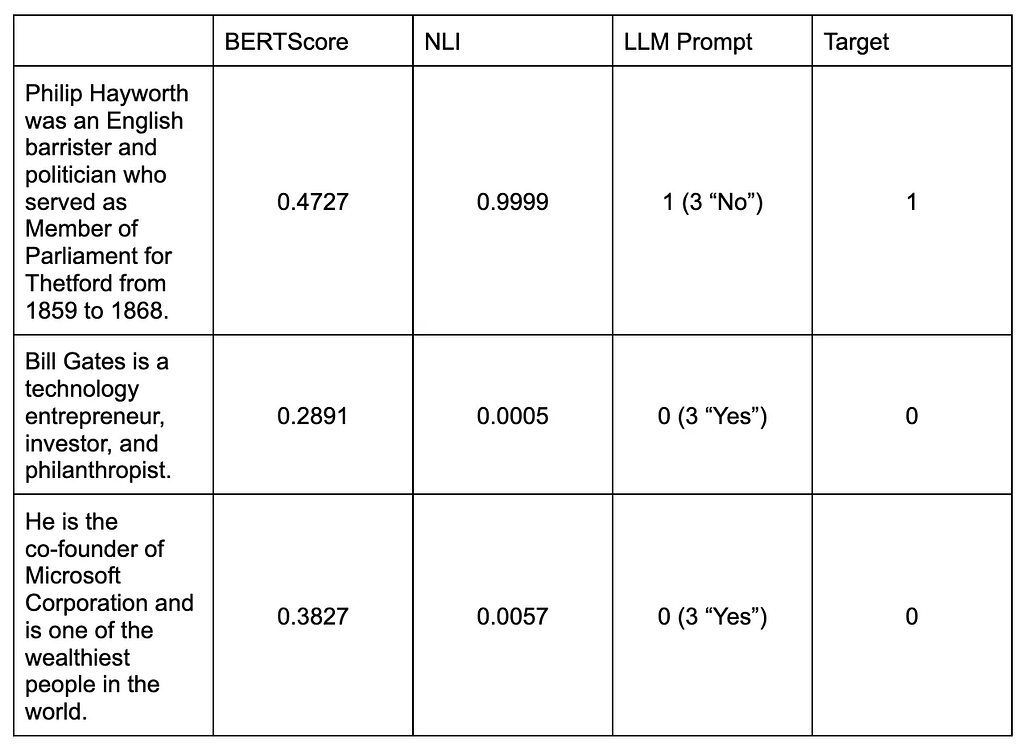

Let’s see what we get as results for the two examples we’ve been discussing for each of the three approaches.

These values are solely meant to illustrate the method. With only three sentences, they are not meant to be a basis for comparing the approaches and determining which is best. For that purpose, the original paper shares the experimental results in its repository, which includes additional versions that weren’t discussed in this blog post. We won’t go into the details of the results, but by all three metrics (NonFact, Factual, and Ranking), the LLM-Prompt version performs best, closely followed by the NLI version. The BERTScore version looks considerably worse than the other two. Our simple examples seem to follow along the lines of the shared results.

Conclusion

We hope this blog post helped explain the hallucination problem and presented one possible solution for hallucination detection. This is a relatively new problem, and it’s good to see that efforts are being made towards solving it.

The discussed approach has the advantage of not requiring external context (zero-resource) and also not requiring the LLM’s output probability distribution (black-box). However, this comes with a cost: in addition to the original response, we need to generate extra samples to perform the consistency check, increasing latency and cost. The consistency check will also require additional computation and language models for encoding the responses into embeddings, performing textual entailment, or querying the LLM, depending on the chosen method.

References

[1] Manakul, P., Liusie, A., & Gales, M. J. F. (2023). SelfCheckGPT: Zero-resource black-box hallucination detection for generative large language models. arXiv preprint arXiv:2303.08896.

[2] Ji, Z., et al. (2023). Survey of hallucination in natural language generation. ACM Computing Surveys, 55(12), 1–38.

[3] Zhang, T., et al. (2019). BERTScore: Evaluating text generation with BERT. arXiv preprint arXiv:1904.09675.

[4] https://nlpprogress.com/english/natural_language_inference.html

[5] Williams, A., Nangia, N., & Bowman, S. R. (2017). A broad-coverage challenge corpus for sentence understanding through inference. arXiv preprint arXiv:1704.05426.

[6] https://github.com/potsawee/selfcheckgpt/tree/main#selfcheckgpt-usage-nli

Understanding and Mitigating LLM Hallucinations was originally published in Towards Data Science on Medium.