Image by Author

Large Language Models have revolutionized the Natural Language Processing field, offering unprecedented capabilities in tasks like language translation, sentiment analysis, and text generation.

However, training such models is both time-consuming and expensive. This is why fine-tuning has become a crucial step for tailoring these advanced algorithms to specific tasks or domains.

Just to make sure we are on the same page, we need to recall two concepts:

- Pre-trained language models

- Fine-tuning

So let’s break down these two concepts.

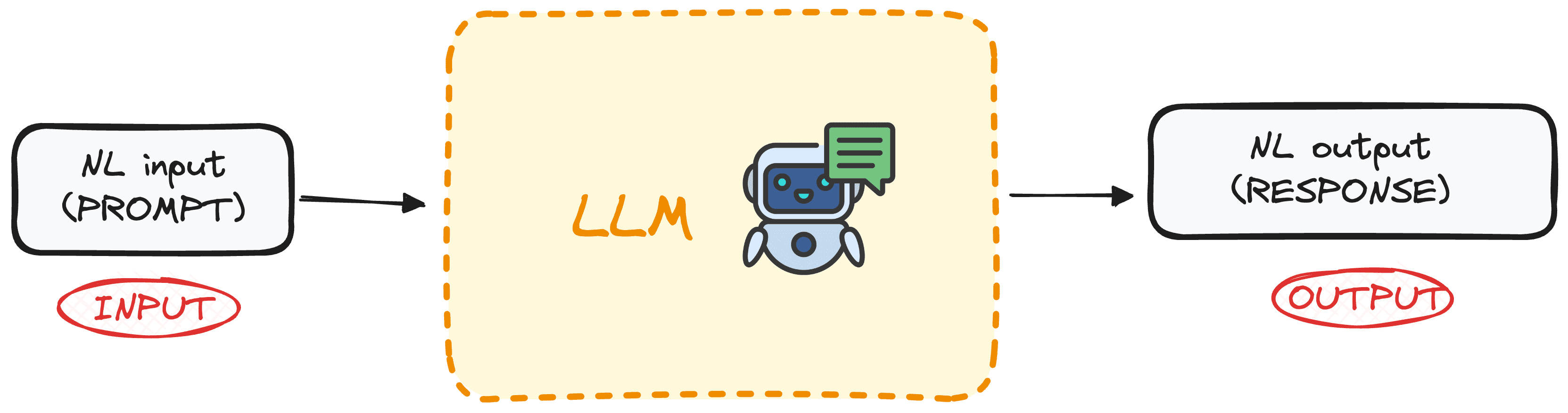

What is a Pre-trained Large Language Model?

LLMs are a specific category of Machine Learning meant to predict the next word in a sequence based on the context provided by the previous words. These models are based on the Transformers architecture and are trained on extensive text data, enabling them to understand and generate human-like text.

The best part of this new technology is its democratization, as most of these models are under open-source license or are accessible through APIs at low costs.

Image by Author

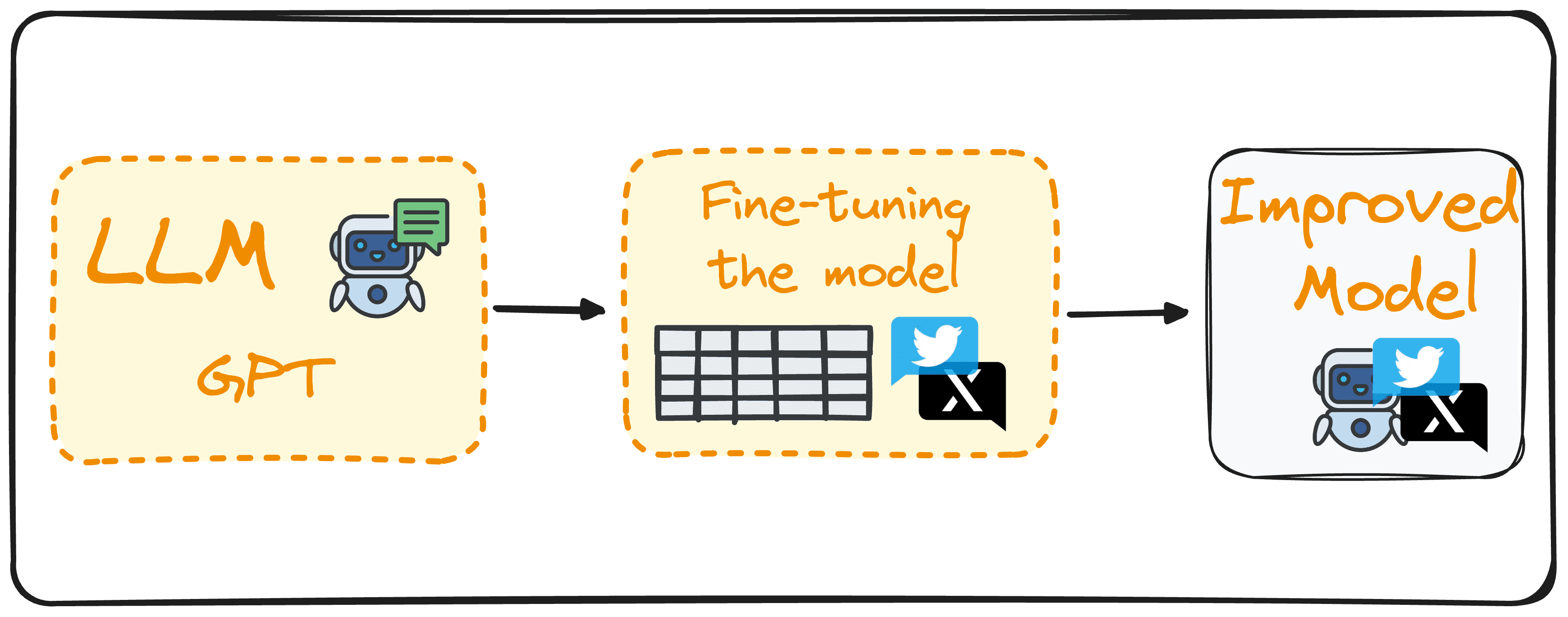

What is Fine-tuning?

Fine-tuning involves using a Large Language Model as a base and further training it with a domain-based dataset to enhance its performance on specific tasks.

Let’s take as an example a model to detect sentiment out of tweets. Instead of creating a new model from scratch, we could take advantage of the natural language capabilities of GPT-3 and further train it with a data set of tweets labeled with their corresponding sentiment.

This would improve this model in our specific task of detecting sentiments out of tweets.

This process reduces computational costs, eliminates the need to develop new models from scratch and makes them more effective for real-world applications tailored to specific needs and goals.

Image by Author

So now that we know the basics, you can learn how to fine-tune your model following these 7 steps.

Various Approaches to Fine-tuning

Fine-tuning can be implemented in different ways, each tailored to specific objectives and focuses.

Supervised Fine-tuning

This common method involves training the model on a labeled dataset relevant to a specific task, like text classification or named entity recognition. For example, a model could be trained on texts labeled with sentiments for sentiment analysis tasks.

Few-shot Learning

In situations where it’s not feasible to gather a large labeled dataset, few-shot learning comes into play. This method uses only a few examples to give the model a context of the task, thus bypassing the need for extensive fine-tuning.

Transfer Learning

While all fine-tuning is a form of transfer learning, this specific category is designed to enable a model to tackle a task different from its initial training. It utilizes the broad knowledge acquired from a general dataset and applies it to a more specialized or related task.

Domain-specific Fine-tuning

This approach focuses on preparing the model to comprehend and generate text for a specific industry or domain. By fine-tuning the model on text from a targeted domain, it gains better context and expertise in domain-specific tasks. For instance, a model might be trained on medical records to tailor a chatbot specifically for a medical application.

Best Practices for Effective Fine-tuning

To perform a successful fine-tuning, some key practices need to be considered.

Data Quality and Quantity

The performance of a model during fine-tuning greatly depends on the quality of the dataset used. Always keep in mind:

Garbage in, garbage out.

Therefore, it’s crucial to use clean, relevant, and adequately large datasets for training.

Hyperparameter Tuning

Fine-tuning is an iterative process that often requires adjustments. Experiment with different learning rates, batch sizes, and training durations to find the optimal configuration for your project.

Precise tuning is essential to efficient learning and adapting to new data, helping to avoid overfitting.

Regular Evaluation

Continuously monitor the model’s performance throughout the training process using a separate validation dataset.

This regular evaluation helps track how well the model is performing on the intended task and checks for any signs of overfitting. Adjustments should be made based on these evaluations to fine-tune the model’s performance effectively.

Navigating Pitfalls in LLM Fine-Tuning

This process can lead to unsatisfactory outcomes if certain pitfalls are not avoided as well:

Overfitting

Training the model with a small dataset or undergoing too many epochs can lead to overfitting. This causes the model to perform well on training data but poorly on unseen data, and therefore, have a low accuracy for real-world applications.

Underfitting

It occurs when the training is too brief or the learning rate is set too low, resulting in a model that doesn’t learn the task effectively. This produces a model that does not know how to perform our specific goal.

Catastrophic Forgetting

When fine-tuning a model on a specific task, there’s a risk of the model forgetting the broad knowledge it originally had. This phenomenon, known as catastrophic forgetting, reduces the model’s effectiveness across diverse tasks, especially when considering natural language skills.

Data Leakage

Ensure that your training and validation datasets are completely separate to avoid data leakage. Overlapping datasets can falsely inflate performance metrics, giving an inaccurate measure of model effectiveness.

Final Thoughts and Future Steps

Starting the process of fine-tuning large language models presents a huge opportunity to improve the current state of models for specific tasks.

By grasping and implementing the detailed concepts, best practices, and necessary precautions, you can successfully customize these robust models to suit specific requirements, thereby fully leveraging their capabilities.

Josep Ferrer is an analytics engineer from Barcelona. He graduated in physics engineering and is currently working in the data science field applied to human mobility. He is a part-time content creator focused on data science and technology. Josep writes on all things AI, covering the application of the ongoing explosion in the field.