Image by Author

As a data scientist, you need Python for detailed data analysis, data visualization, and modeling. However, when your data is stored in a relational database, you need to use SQL (Structured Query Language) to extract and manipulate the data. But how do you integrate SQL with Python to unlock the full potential of your data?

In this tutorial, we will learn to combine the power of SQL with the flexibility of Python using SQLAlchemy and Pandas. We will learn how to connect to databases, execute SQL queries using SQLAlchemy, and analyze and visualize data using Pandas.

Install Pandas and SQLAlchemy using:

pip install pandas sqlalchemy

1. Saving the Pandas DataFrame as an SQL Table

To create the SQL table using the CSV dataset, we will:

- Create a SQLite database using the SQLAlchemy.

- Load the CSV dataset using the Pandas. The countries_poluation dataset consists of the Air Quality Index (AQI) for all countries in the world from 2017 to 2023.

- Convert all the AQI columns from object to numerical and drop row with missing values.

# Import necessary packages

import pandas as pd

import psycopg2

from sqlalchemy import create_engine

# creating the new db

engine = create_engine(

"sqlite:///kdnuggets.db")

# read the CSV dataset

data = pd.read_csv("/work/air_pollution new.csv")

col = ['2017', '2018', '2019', '2020', '2021', '2022', '2023']

for s in col:

data[s] = pd.to_numeric(data[s], errors="coerce")

data = data.dropna(subset=[s])

- Save the Pandas dataframe as a SQL table. The `to_sql` function requires a table name and the engine object.

# save the dataframe as a SQLite table

data.to_sql('countries_poluation', engine, if_exists="replace")

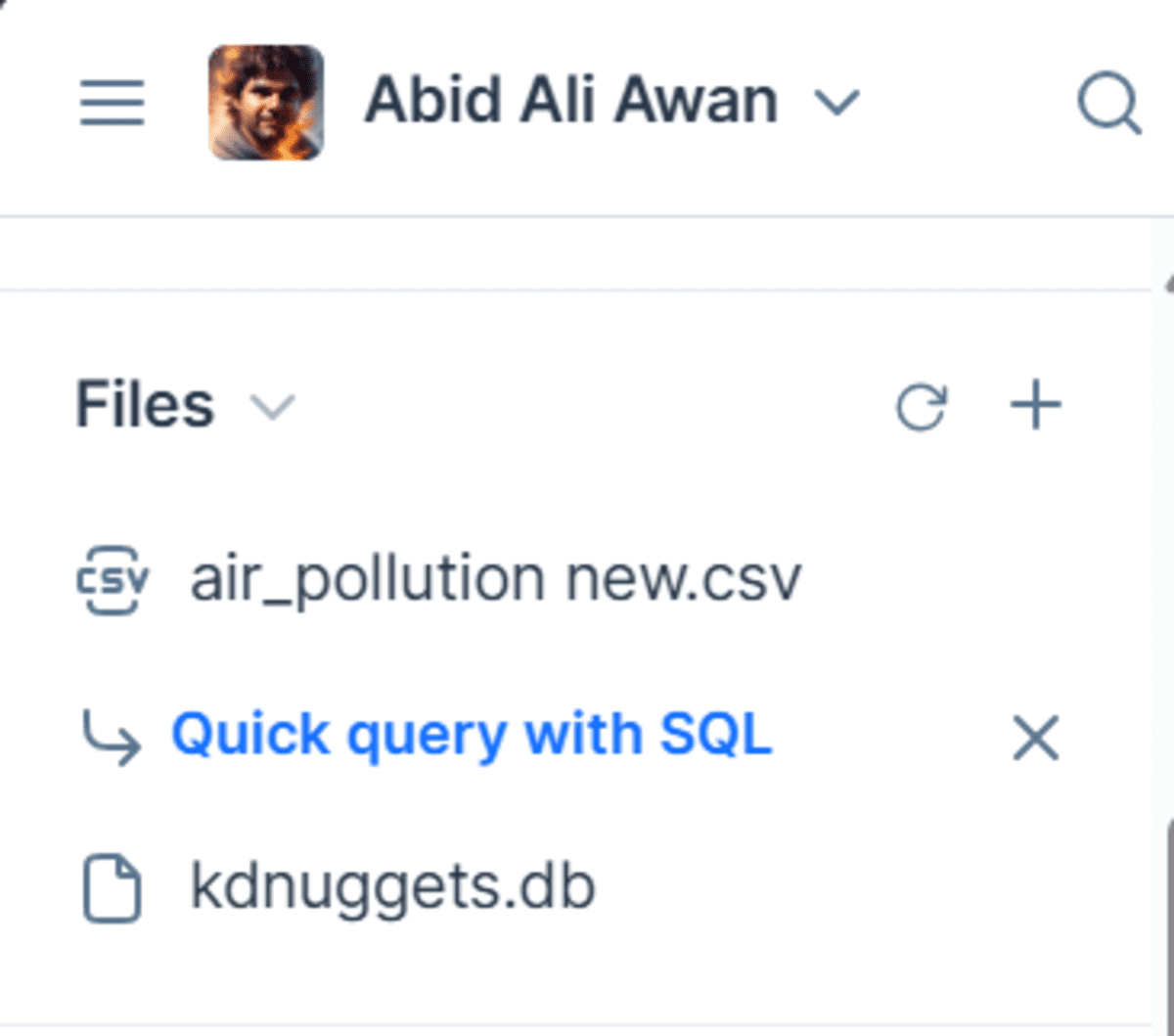

As a result, your SQLite database is saved in your file directory.

Note: I am using Deepnote for this tutorial to run the Python code seamlessly. Deepnote is a free AI Cloud Notebook that will help you quickly run any data science code.

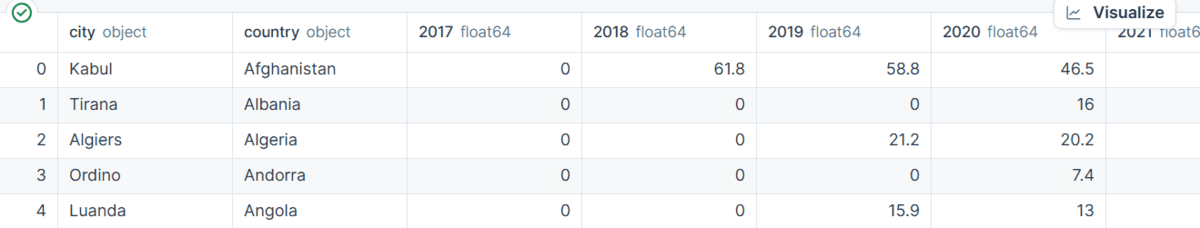

2. Loading the SQL Table using Pandas

To load the entire table from the SQL database as a Pandas dataframe, we will:

- Establish the connection with our database by providing the database URL.

- Use the `pd.read_sql_table` function to load the entire table and convert it into a Pandas dataframe. The function requires table anime, engine objects, and column names.

- Display the top 5 rows.

import pandas as pd

import psycopg2

from sqlalchemy import create_engine

# establish a connection with the database

engine = create_engine("sqlite:///kdnuggets.db")

# read the sqlite table

table_df = pd.read_sql_table(

"countries_poluation",

con=engine,

columns=['city', 'country', '2017', '2018', '2019', '2020', '2021', '2022',

'2023']

)

table_df.head()

The SQL table has been successfully loaded as a dataframe. This means that you can now use it to perform data analysis and visualization using popular Python packages such as Seaborn, Matplotlib, Scipy, Numpy, and more.

3. Running the SQL Query using Pandas

Instead of restricting ourselves to one table, we can access the entire database by using the `pd.read_sql` function. Just write a simple SQL query and provide it with the engine object.

The SQL query will display two columns from the “countries_population” table, sort it by the “2023” column, and display the top 5 results.

# read table data using sql query

sql_df = pd.read_sql(

"SELECT city,[2023] FROM countries_poluation ORDER BY [2023] DESC LIMIT 5",

con=engine

)

print(sql_df)

We got to the top 5 cities in the world with the worst air quality.

city 2023

0 Lahore 97.4

1 Hotan 95.0

2 Bhiwadi 93.3

3 Delhi (NCT) 92.7

4 Peshawar 91.9

4. Using the SQL Query Result with Pandas

We can also use the results from SQL query and perform further analysis. For example, calculate the average of the top five cities using Pandas.

average_air = sql_df['2023'].mean()

print(f"The average of top 5 cities: {average_air:.2f}")

Output:

The average of top 5 cities: 94.06

Or, create a bar plot by specifying the x and y arguments and the type of plot.

sql_df.plot(x="city",y="2023",kind = "barh");

Conclusion

The possibilities of using SQLAlchemy with Pandas are endless. You can perform simple data analysis using the SQL query, but to visualize the results or even train the machine learning model, you have to convert it into a Pandas dataframe.

In this tutorial, we have learned how to load a SQL database into Python, perform data analysis, and create visualizations. If you enjoyed this guide, you will also appreciate ‘A Guide to Working with SQLite Databases in Python‘, which provides an in-depth exploration of using Python’s built-in sqlite3 module.

Abid Ali Awan (@1abidaliawan) is a certified data scientist professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master’s degree in technology management and a bachelor’s degree in telecommunication engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.