Image by Author | DALL-E 3 & Canva

Missing values are a common problem in real-world datasets. They can occur for various reasons, such as missed observations, data transmission errors, or sensor malfunctions. We cannot simply ignore them, as they can skew the results of our models. We must either remove them from our analysis or fill them in so that our dataset is complete. Removing them, however, leads to information loss, which we usually want to avoid. So practitioners have developed various ways to fill in missing values, such as imputation and interpolation. People often confuse these two techniques, and imputation is the more familiar term for beginners. Before we proceed further, let me draw a clear boundary between them.

Imputation, in its simplest form, fills the missing values with a statistical measure such as the mean, median, or mode. It is simple, but it ignores the trend of the dataset. Interpolation, on the other hand, estimates each missing value from the surrounding trends and patterns. This approach works best when the missing values are relatively few and surrounded by valid data points.
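To make the distinction concrete, here is a minimal sketch on a small, made-up series with a steady upward trend (the numbers are purely illustrative). Mean imputation uses `fillna` with the series mean, while `interpolate()` uses its default linear method:

```python
import numpy as np
import pandas as pd

# Illustrative series that rises by 10 at each step, with one gap at position 3
s = pd.Series([10.0, 20.0, 30.0, np.nan, 50.0, 60.0, 70.0, 80.0])

# Imputation: fill the gap with the mean of the observed values (NaN is skipped)
imputed = s.fillna(s.mean())

# Interpolation: estimate the gap from its neighbors (linear by default)
interpolated = s.interpolate()

print(imputed.iloc[3])       # the mean of the seven observed values
print(interpolated.iloc[3])  # halfway between 30 and 50
```

The mean (about 45.7) ignores the fact that the series climbs steadily, whereas linear interpolation lands on 40, exactly between its two neighbors.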

Now that we know the difference between these techniques, let’s discuss some of the interpolation methods available in Pandas. Then I will walk you through an example and, finally, share some tips to help you choose the right interpolation technique.

Types of Interpolation Methods in Pandas

Pandas offers various interpolation methods (‘linear’, ‘time’, ‘index’, ‘values’, ‘pad’, ‘nearest’, ‘zero’, ‘slinear’, ‘quadratic’, ‘cubic’, ‘barycentric’, ‘krogh’, ‘polynomial’, ‘spline’, ‘piecewise_polynomial’, ‘from_derivatives’, ‘pchip’, ‘akima’, ‘cubicspline’) that you can access through the interpolate() method. Most methods other than ‘linear’, ‘time’, ‘index’, ‘values’, and ‘pad’ are wrappers around SciPy, so SciPy must be installed to use them. The syntax of this method is as follows:

```python
DataFrame.interpolate(method='linear', *, axis=0, limit=None, inplace=False, limit_direction=None, limit_area=None, downcast=_NoDefault.no_default, **kwargs)
```
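Beyond `method`, the `limit`, `limit_direction`, and `limit_area` keywords control which gaps get filled. Here is a small sketch on an illustrative series (values made up for the demo) with a leading NaN, an interior gap of three, and a trailing NaN:

```python
import numpy as np
import pandas as pd

# Leading NaN, an interior gap of three NaNs, and a trailing NaN
s = pd.Series([np.nan, 1.0, np.nan, np.nan, np.nan, 5.0, np.nan])

default = s.interpolate()                     # linear, limit_direction='forward'
limited = s.interpolate(limit=1)              # at most one consecutive NaN per gap
inside = s.interpolate(limit_area="inside")   # only NaNs surrounded by valid values
both = s.interpolate(limit_direction="both")  # also fill the leading NaN

# Compare the results side by side
print(pd.DataFrame({"original": s, "default": default, "limit=1": limited,
                    "inside": inside, "both": both}))
```

With the default linear method, `limit_direction` is ‘forward’, so the leading NaN stays empty while the trailing NaN is filled by repeating the last valid value. `limit=1` fills only the first NaN of the three-value gap, `limit_area="inside"` leaves both ends untouched, and `limit_direction="both"` also fills the leading NaN.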

I know this is a lot of methods, and I don’t want to overwhelm you, so we will discuss only a few of the most commonly used ones:

- Linear Interpolation: This is the default method, and it is computationally fast and simple. It connects the known data points with straight lines and uses those lines to estimate the missing values. Note that it ignores the index and treats the values as equally spaced.

- Time Interpolation: Time-based interpolation is useful when your observations are unevenly spaced in time. It requires a datetime index and fills in the missing values by taking into account the actual time intervals between the data points.

- Index Interpolation: This is similar to time interpolation, but it uses the numerical values of the index to calculate the missing values (‘values’ behaves the same way). The index does not need to be a datetime index, but it should carry meaningful numerical information, such as temperature or distance.

- Pad (Forward Fill) and Backward Fill Method: These methods copy an existing value into the gap. Forward fill propagates the last valid observation forward, while backward fill uses the next valid observation instead. You can also call ffill() and bfill() directly on a DataFrame or Series.

- Nearest Interpolation: As the name suggests, this method fills each missing value with the value of the nearest valid data point, so it follows local variations in the data without creating new values.

- Polynomial Interpolation: Real-world data is often non-linear, so this method fits a polynomial function to the data points to estimate the missing values. You also need to specify the order (e.g., order=2 for quadratic).

- Spline Interpolation: Don’t be intimidated by the complex name. A spline is built from piecewise polynomial functions that connect the data points, resulting in a smooth overall curve. Like ‘polynomial’, it requires an order. You will notice that interpolate() also offers ‘piecewise_polynomial’ as a separate method. The difference is that the latter does not guarantee continuity of the derivatives at the boundaries between pieces, so it can produce more abrupt changes.
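The sketch below compares several of these methods on a tiny, hypothetical series whose observations are unevenly spaced in time (one day apart, then eight days apart). It sticks to methods that work without SciPy; ‘nearest’, ‘polynomial’, and ‘spline’ need SciPy installed and are left out:

```python
import numpy as np
import pandas as pd

# Hypothetical readings on unevenly spaced days: Jan 1, Jan 2 (missing), Jan 10
dates = pd.to_datetime(["2024-01-01", "2024-01-02", "2024-01-10"])
s = pd.Series([0.0, np.nan, 90.0], index=dates)

linear = s.interpolate(method="linear")  # treats rows as equally spaced: midpoint, 45
by_time = s.interpolate(method="time")   # 1 of 9 days elapsed: about 10
forward = s.ffill()                      # repeats the last valid value: 0
backward = s.bfill()                     # repeats the next valid value: 90

# 'index' does the same for a numeric index, using the index values as x-coordinates
num = pd.Series([0.0, np.nan, 90.0], index=[0, 1, 10])
by_index = num.interpolate(method="index")  # 1 of 10 units along: 9

for name, result in [("linear", linear), ("time", by_time), ("ffill", forward),
                     ("bfill", backward), ("index", by_index)]:
    print(f"{name:>6}: {result.iloc[1]:.2f}")
```

Only ‘time’ and ‘index’ take the uneven spacing into account; ‘linear’ places the estimate halfway between its neighbors regardless of how large the gaps are.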

Enough theory. Let’s see how interpolation works on the Airline Passengers dataset, which contains monthly passenger counts from 1949 to 1960.

Code Implementation: Airline Passenger Dataset

We will introduce some missing values in the Airline Passenger Dataset and then interpolate them using one of the above techniques.

Step 1: Making Imports & Loading Dataset

Import the libraries below and load the dataset’s CSV file into a DataFrame using the pd.read_csv function.

```python
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt

# Load the dataset
url = "https://raw.githubusercontent.com/jbrownlee/Datasets/master/airline-passengers.csv"
df = pd.read_csv(url, index_col="Month", parse_dates=['Month'])
```

parse_dates converts the ‘Month’ column to datetime values, and index_col sets it as the DataFrame’s index, so the DataFrame ends up with a DatetimeIndex.
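If you want to check what `parse_dates` and `index_col` produce without downloading the file, you can feed `pd.read_csv` a few inline rows. This sketch assumes the CSV has a ‘Month’ column with values like ‘1949-01’; the rows are illustrative:

```python
import io

import pandas as pd

# A few illustrative rows in the same "Month,Passengers" layout as the real CSV
csv_text = "Month,Passengers\n1949-01,112\n1949-02,118\n1949-03,132\n"

df_small = pd.read_csv(io.StringIO(csv_text), index_col="Month", parse_dates=["Month"])

# The 'Month' strings become timestamps and form the index
print(type(df_small.index).__name__)
print(df_small.index[0])
```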

Step 2: Introduce Missing Values

Now, we will randomly select 15 months and set their ‘Passengers’ values to np.nan to represent missing values.

```python
# Introduce missing values
np.random.seed(0)
missing_idx = np.random.choice(df.index, size=15, replace=False)
df.loc[missing_idx, 'Passengers'] = np.nan
```

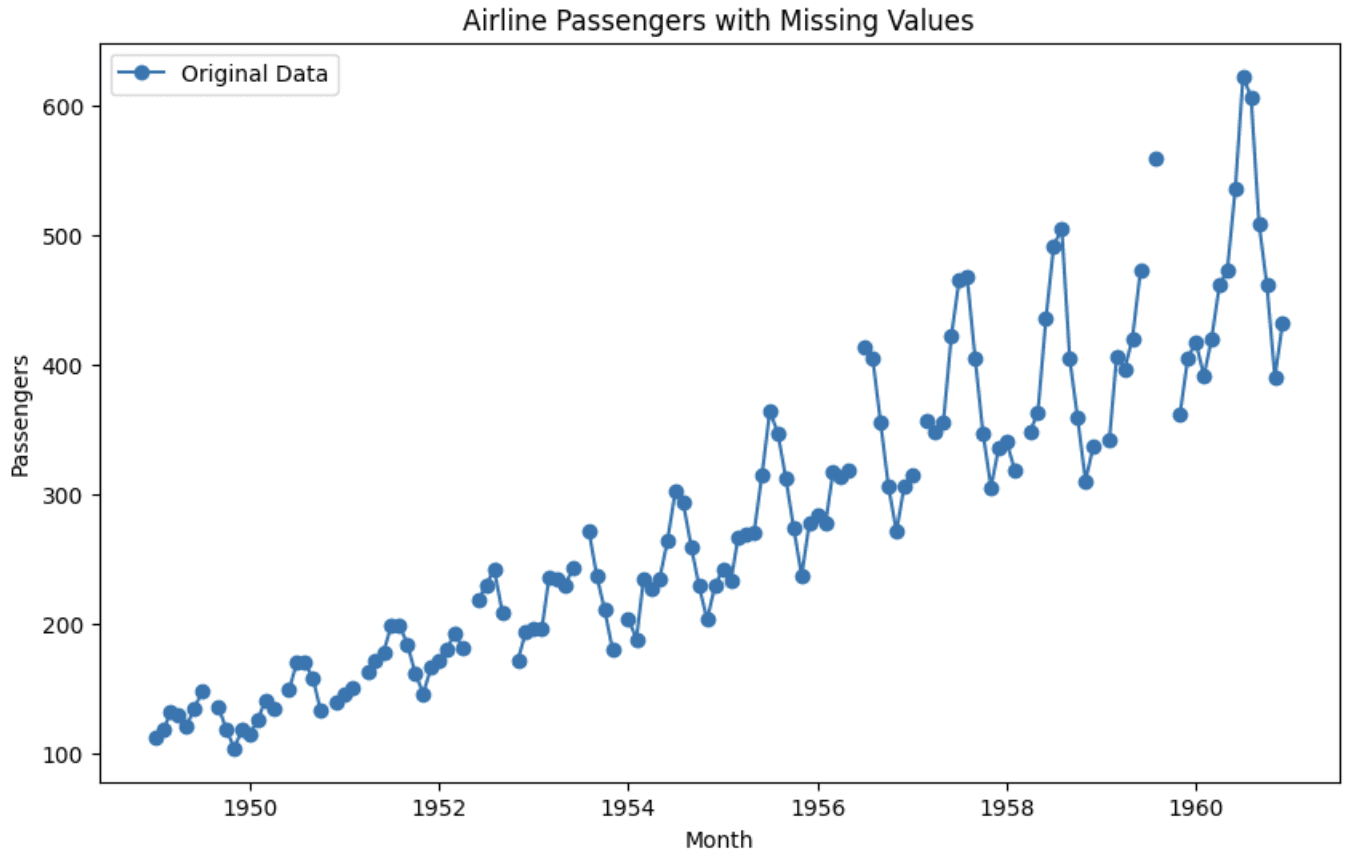
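Before plotting, it is worth confirming that exactly 15 values are now missing. Because the real dataset needs a download, this sketch repeats the masking step on a synthetic stand-in called `demo` with the same shape (144 month-start dates from 1949 to 1960 and made-up, steadily rising values). On the real data, replace `demo` with `df`:

```python
import numpy as np
import pandas as pd

# Synthetic stand-in: 144 month-start dates (1949-01 to 1960-12) with made-up values
idx = pd.date_range("1949-01-01", periods=144, freq="MS", name="Month")
demo = pd.DataFrame({"Passengers": np.linspace(100.0, 600.0, 144)}, index=idx)

# Same masking step as above
np.random.seed(0)
missing_idx = np.random.choice(demo.index, size=15, replace=False)
demo.loc[missing_idx, "Passengers"] = np.nan

# Count the missing values and list the months they fall in
print(demo["Passengers"].isna().sum())
print(demo[demo["Passengers"].isna()].index.strftime("%Y-%m").tolist())
```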

Step 3: Plotting Data with Missing Values

We will use Matplotlib to visualize how our data looks after introducing 15 missing values.

```python
# Plot the data with missing values
plt.figure(figsize=(10,6))
plt.plot(df.index, df['Passengers'], label="Original Data", linestyle="-", marker="o")
plt.legend()
plt.title('Airline Passengers with Missing Values')
plt.xlabel('Month')
plt.ylabel('Passengers')
plt.show()
```

Graph of original dataset

You can see that the line is broken at several points. Matplotlib does not draw line segments through NaN values, so each break marks a missing value.

Step 4: Using Interpolation

Though I will share some tips later to help you pick the right interpolation technique, let’s focus on this dataset. This is time-series data, but its trend is not linear: passenger numbers rise over the years and oscillate within each year, so a linear trend holds only within a small neighborhood. Time-based interpolation draws straight lines between neighboring points, so it would not capture these curves well. Spline interpolation, which fits smooth piecewise polynomials, should follow them more closely. So, let’s apply it and check how the visualization turns out after interpolating the missing values.

```python
# Use spline interpolation to fill in missing values
# (method='spline' requires SciPy; order=3 fits a cubic spline)
df_interpolated = df.interpolate(method='spline', order=3)

# Plot the interpolated data
plt.figure(figsize=(10,6))
plt.plot(df_interpolated.index, df_interpolated['Passengers'], label="Spline Interpolation")
plt.plot(df.index, df['Passengers'], label="Original Data", alpha=0.5)
# Highlight the points that were filled in
plt.scatter(missing_idx, df_interpolated.loc[missing_idx, 'Passengers'], label="Interpolated Values", color="green")
plt.legend()
plt.title('Airline Passengers with Spline Interpolation')
plt.xlabel('Month')
plt.ylabel('Passengers')
plt.show()
```

Graph after interpolation

The graph shows that the interpolated values fill in the gaps while preserving the overall pattern. The completed dataset can now be used for further analysis or forecasting.

Tips for Choosing the Interpolation Method

This bonus part of the article shares some tips for choosing a method:

- Visualize your data to understand its distribution and pattern. If the data is evenly spaced and the missing values are randomly scattered rather than clustered in long gaps, simple techniques such as linear interpolation usually work well.

- If you observe trends or seasonality in your time-series data, spline or polynomial interpolation is usually better at preserving them while filling in the missing values, as demonstrated in the example above.

- Higher-order polynomials fit the data more flexibly but are prone to overfitting and can swing wildly between points. Keep the order low to avoid unrealistic shapes.

- For unevenly spaced values, use index-aware methods such as ‘index’ or ‘time’, which account for the actual distance between points and fill gaps without distorting the scale. Forward fill and backward fill are also options here.

- If your values change only occasionally and do not follow a clear rising or falling pattern, filling with the nearest valid value also works well.

- Test different methods on a sample of the data: hide some known values, interpolate them, and evaluate how close the estimates are to the actual data points.
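The last tip can be turned into a small experiment: hide values you already know, fill them with each candidate method, and measure the mean absolute error. `score_fill_methods` below is a hypothetical helper, not part of Pandas. It only includes methods that run without SciPy; if SciPy is installed, you could add an entry such as `lambda s: s.interpolate(method="spline", order=3)`:

```python
import numpy as np
import pandas as pd


def score_fill_methods(series, n_missing=10, seed=0):
    """Hide known values, refill them with each method, and return the MAE per method."""
    series = series.astype(float)
    rng = np.random.default_rng(seed)

    # Only hide interior points so every method has valid neighbors on both sides
    candidates = series.index[1:-1]
    hidden_idx = candidates[rng.choice(len(candidates), size=n_missing, replace=False)]

    masked = series.copy()
    masked.loc[hidden_idx] = np.nan

    fillers = {
        "linear": lambda s: s.interpolate(method="linear"),
        "ffill": lambda s: s.ffill(),
        "bfill": lambda s: s.bfill(),
    }
    scores = {}
    for name, fill in fillers.items():
        filled = fill(masked)
        # Mean absolute error on the hidden positions only
        errors = (filled.loc[hidden_idx] - series.loc[hidden_idx]).abs()
        scores[name] = float(errors.mean())
    return scores


# Illustrative series with a perfectly linear trend
toy = pd.Series(np.arange(50, dtype=float) * 2.0)
scores = score_fill_methods(toy)
print(scores)
```

On this perfectly linear toy series, linear interpolation recovers the hidden values exactly, while forward and backward fill miss each one by at least one step. On the airline data, you would pass the ‘Passengers’ column before introducing the NaNs.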

If you want to explore the other parameters of the `DataFrame.interpolate` method, the Pandas documentation is the best place to start: Pandas Documentation.

Kanwal Mehreen is a machine learning engineer and a technical writer with a profound passion for data science and the intersection of AI with medicine. She co-authored the ebook “Maximizing Productivity with ChatGPT”. As a Google Generation Scholar 2022 for APAC, she champions diversity and academic excellence. She’s also recognized as a Teradata Diversity in Tech Scholar, Mitacs Globalink Research Scholar, and Harvard WeCode Scholar. Kanwal is an ardent advocate for change, having founded FEMCodes to empower women in STEM fields.