Image created with DALL-E3

Artificial Intelligence has been a complete revolution in the tech world.

Its ability to mimic human intelligence and perform tasks that were once considered solely human domains still amazes most of us.

However, no matter how good these late AI leap forwards have been, there’s always room for improvement.

And this is precisely where prompt engineering kicks in!

Enter this field that can significantly enhance the productivity of AI models.

Let’s discover it all together!

Prompt engineering is a fast-growing domain within AI that focuses on improving the efficiency and effectiveness of language models. It’s all about crafting perfect prompts to guide AI models to produce our desired outputs.

Think of it as learning how to give better instructions to someone to ensure they understand and execute a task correctly.

Why Prompt Engineering Matters

- Enhanced Productivity: By using high-quality prompts, AI models can generate more accurate and relevant responses. This means less time spent on corrections and more time leveraging AI’s capabilities.

- Cost Efficiency: Training AI models is resource-intensive. Prompt engineering can reduce the need for retraining by optimizing model performance through better prompts.

- Versatility: A well-crafted prompt can make AI models more versatile, allowing them to tackle a broader range of tasks and challenges.

Before diving into the most advanced techniques, let’s recall two of the most useful (and basic) prompt engineering techniques.

Sequential Thinking with “Let’s think step by step”

Today it is well-known that LLM models’ accuracy is significantly improved when adding the word sequence “Let’s think step by step”.

Why… you might ask?

Well, this is because we are forcing the model to break down any task into multiple steps, thus making sure the model has enough time to process each of them.

For instance, I could challenge GPT3.5 with the following prompt:

If John has 5 pears, then eats 2, buys 5 more, then gives 3 to his friend, how many pears does he have?

The model will give me an answer right away. However, if I add the final “Let’s think step by step”, I am forcing the model to generate a thinking process with multiple steps.

Few-Shot Prompting

While the Zero-shot prompting refers to asking the model to perform a task without providing any context or previous knowledge, the few-shot prompting technique implies that we present the LLM with a few examples of our desired output along with some specific question.

For example, if we want to come up with a model that defines any term using a poetic tone, it might be quite hard to explain. Right?

However, we could use the following few-shot prompts to steer the model in the direction we want.

Your task is to answer in a consistent style aligned with the following style.

<user>: Teach me about resilience.

<system>: Resilience is like a tree that bends with the wind but never breaks.

It is the ability to bounce back from adversity and keep moving forward.

<user>: Your input here.

If you have not tried it out yet, you can go challenge GPT.

However, as I am pretty sure most of you already know these basic techniques, I will try to challenge you with some advanced techniques.

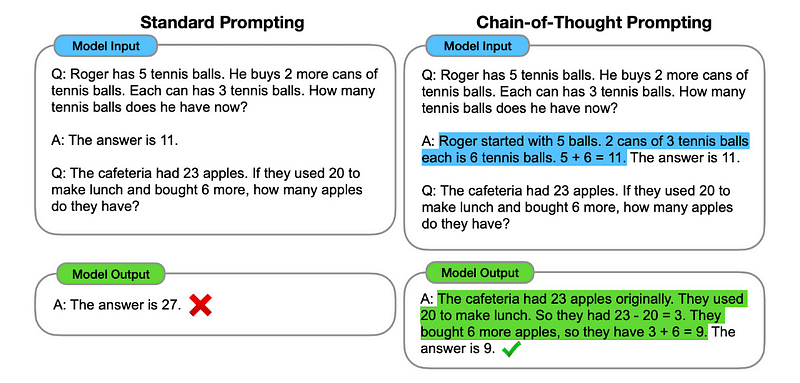

1. Chain of Thought (CoT) Prompting

Introduced by Google in 2022, this method involves instructing the model to undergo several reasoning stages before delivering the ultimate response.

Sounds familiar right? If so, you are totally right.

It is like merging both Sequential Thinking and Few-Shot Prompting.

How?

Essentially, CoT prompting directs the LLM to process information sequentially. This means we exemplify how to solve a first problem with multiple steps reasoning and then send to the model our real task, expecting it to emulate a comparable chain of thought when responding to the actual query we want it to solve.

Image taken from the paper.

So, following the very first example, we could provide a prompt with the first problem and a sequential explanation of how to solve it. Then, we can send our input and see how magic happens!

<user> If John has 5 pears, then eats 2, buys 5 more, then gives 3 to his friend, how many pears does he have? Let’s think step by step.

<agent> Let’s go step by step. John starts with 5 pears. He eats 2 pears, so he has 5–2 = 3 pears left. Then, he buys 5 more pears. So, he now has 3 + 5 = 8 pears. Finally, he gives 3 pears to his friend. Therefore, he has 8–3 = 5 pears left. So, after all these steps, John has 5 pears.

<user> Your input here.

However, most of you must be thinking… Do I have to think of a sequential way to solve any problem any time I want to ask something on ChatGPT?

Well… you are not the first one! And this leads us to…

2. Automatic Chain-of-Thought (Auto-CoT)

In 2022, Zhang and colleagues introduced a method to avoid this manual process. There are two main reasons to avoid any manual task:

- It can be boring.

- It can yield bad results – for instance, when our mental process is wrong.

They suggested using LLMs combined with the “Let’s think step by step” prompt to sequentially produce reasoning chains for each demonstration.

This means asking ChatGPT how to solve any problem sequentially and then using this very same example to train it how to solve any other problem.

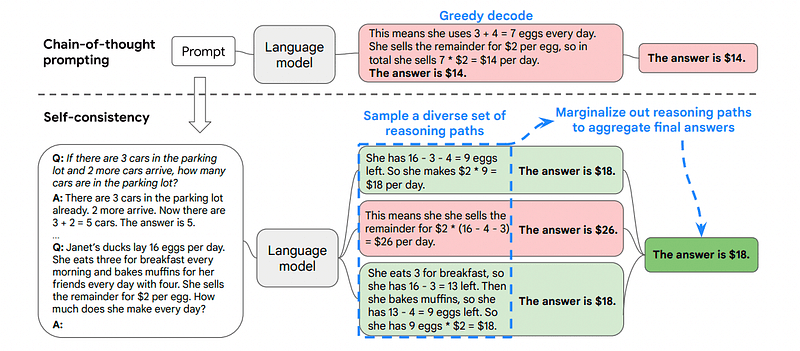

3. Self-Consistency

Self-consistency is another interesting prompting technique that aims to improve the chain of thought prompting for more complex reasoning problems.

So… what’s the main difference?

The main idea under Self-Consistency is being aware that we can train the model with a wrong example. Just imagine I solve the previous problem with a wrong mental process:

<User> If John has 5 pears, then eats 2, buys 5 more, then gives 3 to his friend, how many pears does he have? Let’s think step by step.

<Agent> Start with 5 pears. John eats 2 pears. Then, he gives 3 pears to his friend. These actions can be combined: 2 (eaten) + 3 (given) = 5 pears in total affected. Now, subtract the total pears affected from the initial 5 pears: 5 (initial) – 5 (affected) = 0 pears left.

Then, any other task I send to the model will be wrong.

This is why Self-Consistency involves sampling from various reasoning paths, each of them containing a chain of thought, and then letting the LLM choose the best and most consistent path to solve the problem.

Image taken from the paper

In this case, and following the very first example again, we can show the model different ways of solving the problem.

<User> If John has 5 pears, then eats 2, buys 5 more, then gives 3 to his friend, how many pears does he have?

<Agent> Start with 5 pears. John eats 2 pears, leaving him with 5–2 = 3 pears. He buys 5 more pears, which brings the total to 3 + 5 = 8 pears. Finally, he gives 3 pears to his friend, so he has 8–3 = 5 pears left.

<User> If John has 5 pears, then eats 2, buys 5 more, then gives 3 to his friend, how many pears does he have?

<Agent> Start with 5 pears. He then buys 5 more pears. John eats 2 pears now. These actions can be combined: 2 (eaten) + 5 (bought) = 7 pears in total. Subtract the pear that Jon has eaten from the total amount of pears 7 (total amount) – 2 (eaten) = 5 pears left.

<User> Your input here.

And here comes the last technique.

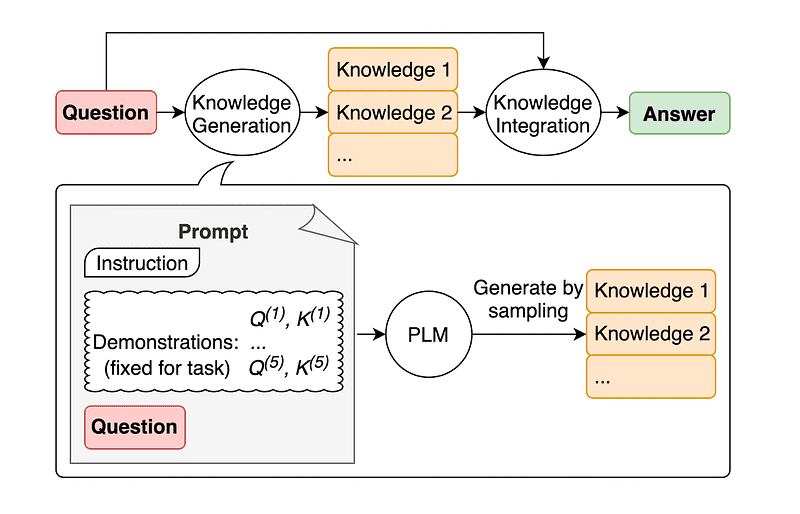

4. General Knowledge Prompting

A common practice of prompt engineering is augmenting a query with additional knowledge before sending the final API call to GPT-3 or GPT-4.

According to Jiacheng Liu and Co, we can always add some knowledge to any request so the LLM knows better about the question.

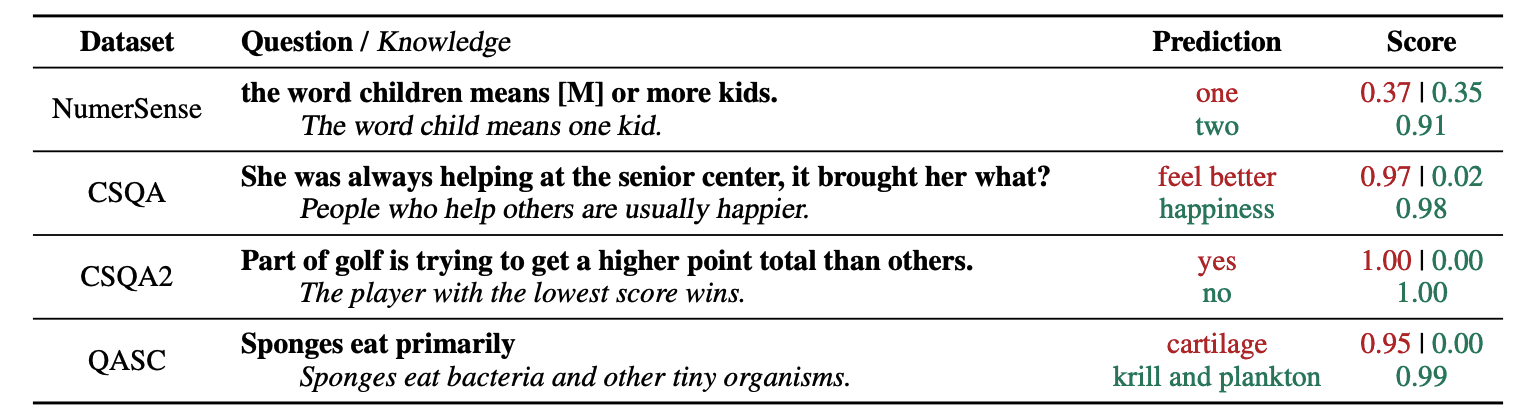

Image taken from the paper.

So for instance, when asking ChatGPT if part of golf is trying to get a higher point total than others, it will validate us. But, the main goal of golf is quite the opposite. This is why we can add some previous knowledge telling it “The player with the lower score wins”.

So.. what’s the funny part if we are telling the model exactly the answer?

In this case, this technique is used to improve the way LLM interacts with us.

So rather than pulling supplementary context from an outside database, the paper’s authors recommend having the LLM produce its own knowledge. This self-generated knowledge is then integrated into the prompt to bolster commonsense reasoning and give better outputs.

So this is how LLMs can be improved without increasing its training dataset!

Prompt engineering has emerged as a pivotal technique in enhancing the capabilities of LLM. By iterating and improving prompts, we can communicate in a more direct manner to AI models and thus obtain more accurate and contextually relevant outputs, saving both time and resources.

For tech enthusiasts, data scientists, and content creators alike, understanding and mastering prompt engineering can be a valuable asset in harnessing the full potential of AI.

By combining carefully designed input prompts with these more advanced techniques, having the skill set of prompt engineering will undoubtedly give you an edge in the coming years.

Josep Ferrer is an analytics engineer from Barcelona. He graduated in physics engineering and is currently working in the Data Science field applied to human mobility. He is a part-time content creator focused on data science and technology. You can contact him on LinkedIn, Twitter or Medium.